One of the important steps in running any data platform is to keep following the best practices and recommendations for a well-architected design. If you don't, costs will skyrocket, performance will deteriorate, and end users won’t be happy.

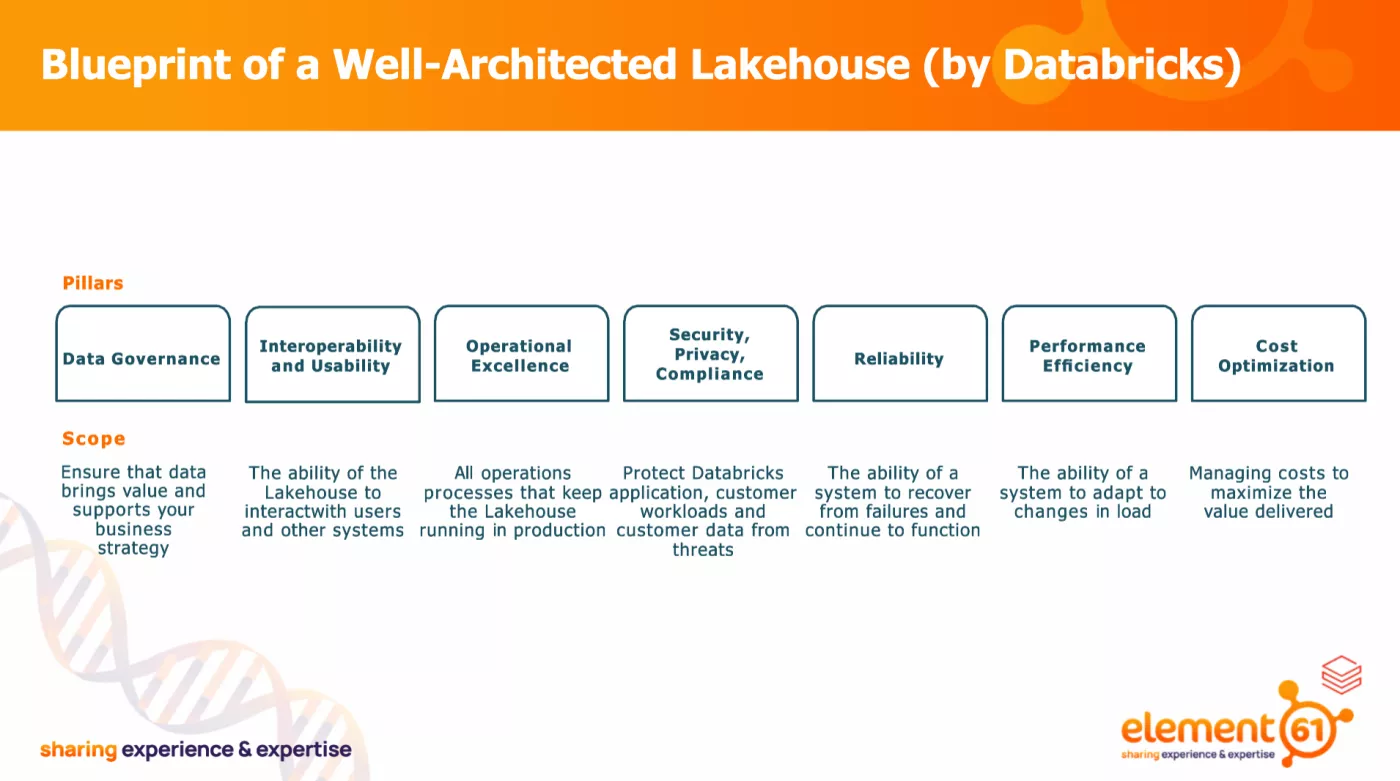

A well-architected Lakehouse is a data platform that combines the best of both worlds: the scalability and flexibility of a data lake and the reliability and performance of a data warehouse. By following the principles of operational excellence, security, reliability, performance efficiency, cost optimization, and data governance, you can ensure that your data platform is able to handle the growing volume, variety, and velocity of data and support the diverse needs of your data consumers and producers.

At element61, we have the expertise and experience to help you adopt and implement the best practices for Databricks in your organization.

- We can help you analyze your current data platform and identify the gaps and opportunities for improvement. We can assess your data architecture, its running costs, its performance, its load times, the implemented data security, the data processing, and how well it enables end users. Once the analysis is done, we provide you with a roadmap and concrete improvements for your data platform.

- We can help you audit and review your current Databricks workloads and configurations and provide recommendations for optimization and performance. Too often, Databricks jobs are put into production without taking performance, cost, and reliability into account. We can evaluate your Databricks notebooks, jobs, clusters, security groups, networks, and storage and suggest ways to improve your performance, scalability, reliability, security, and cost efficiency. We can also help you comply with the relevant regulations and standards for your data, such as GDPR, HIPAA, SOC2, and ISO27001.

- We can help you coach and train your team to use Databricks more effectively and efficiently for various use cases, such as data engineering, data warehousing, data science, and machine learning. We can provide hands-on workshops as well as guided certification programs to help you master the Databricks platform and its features, such as Databricks SQL, Delta Lake, MLflow, and Mosaic. We can also help you adopt the best practices for code development, testing, deployment, and monitoring using Databricks.

- We can help you implement the best practices and recommendations for Databricks in your data platform, such as using serverless services, tuning performance, configuring Unity Catalog, organizing Delta Live Tables, benefiting from Databricks IQ, and more. We can help you leverage the latest and most advanced capabilities of Databricks to simplify your data management, accelerate your data processing, enhance your data quality, automate your data governance, and empower your data innovation.

By partnering with element61, you can leverage the full potential of Databricks and transform your data into valuable insights and solutions for your business. Contact us today to find out more about how we can help you with Databricks best practices.