
element61 is a certified Databricks partner and offers end-to-end support in the set-up, use and training of Databricks.

What is Databricks?

Databricks was founded by the team from UC Berkeley's AMPLab that created Apache Spark, a cluster-computing framework now commonly used for big data processing and AI (and an alternative to a Hadoop/MapReduce system).

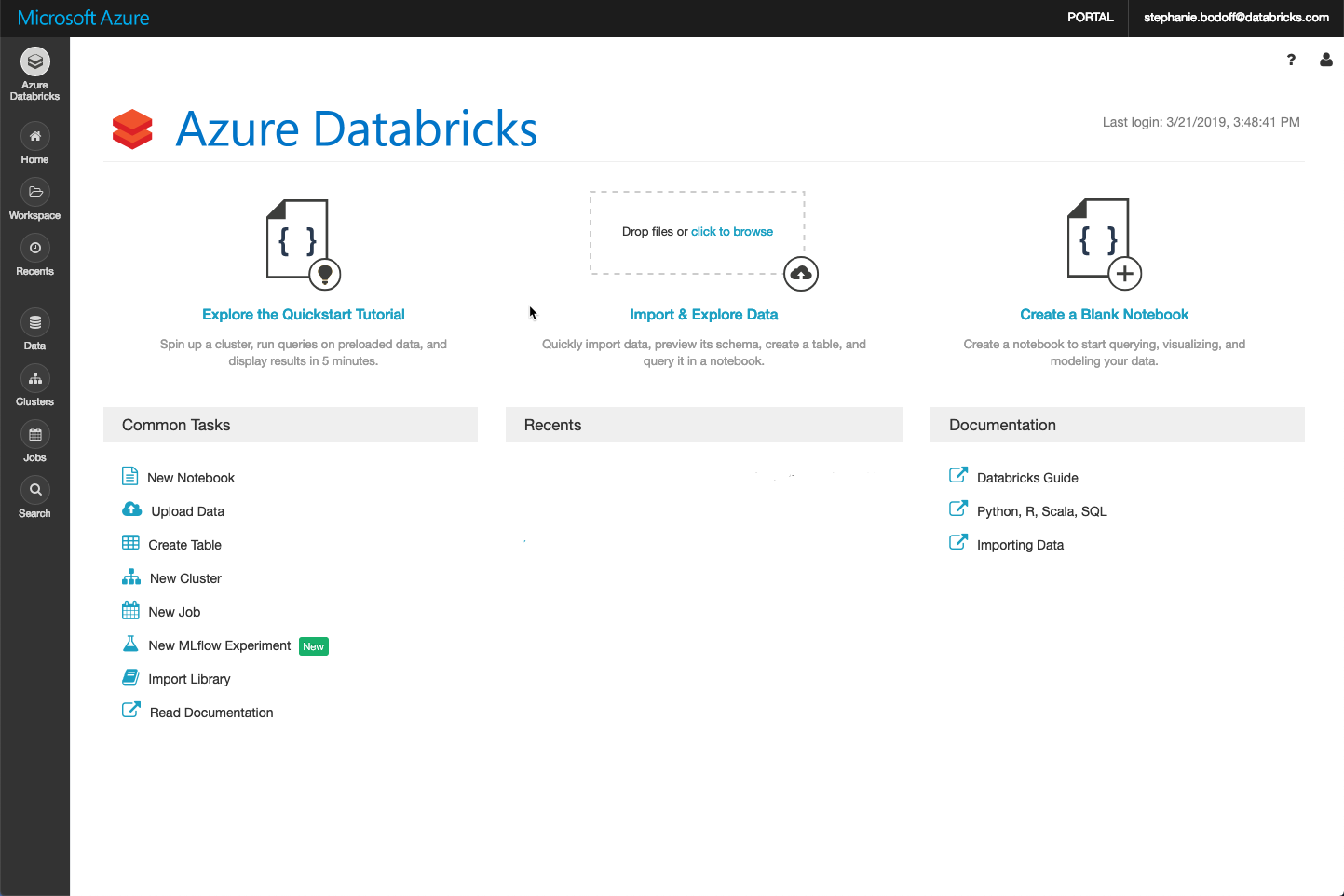

Databricks offers an integrated platform that simplifies working with Apache Spark. Through an integrated workspace, Databricks users can collaborate on notebooks, set up shared data integrations and clusters, and align Databricks with IT security and governance procedures (e.g. Active Directory integration, virtual network security, access rules). Within the Databricks platform, data engineers and data scientists can set up real-time data pipelines, run data mining, schedule data jobs and much more.

Why Databricks?

Setting up an integrated platform on which data scientists and data engineers can collaborate is tough. Although many organizations start Data Science development locally on a laptop or a VM, organizations that embrace the power of AI will at some point need both more compute power and the ability to truly collaborate across teams. Databricks is a hassle-free, top-notch platform for both IT and data users (engineers and scientists).

A Unified Analytics Platform

Databricks offers a unified analytics platform: it started with web-based notebooks running on managed clusters and has been evolving ever since to offer a unified experience for running scalable data engineering and data science in the cloud.

Key functionalities of Databricks

Power of Apache Spark

Databricks is built on top of Apache Spark, an open-source distributed computing framework that spreads computations over a cluster of computers. As data volumes grow and computations need to run ever faster, organizations need cluster computing to guarantee that a data job (or model training) finishes on time. Spark is today one of the most widely used cluster-computing frameworks for real-time processing, big data and AI.

Managed Clusters

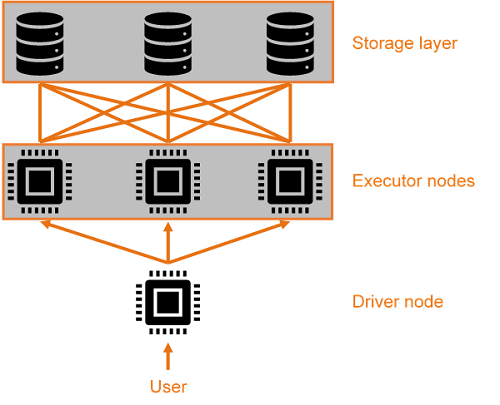

A Spark cluster consists of a driver node and, apart from single-node setups, one or more worker nodes that run executors. The driver splits the work into tasks, distributes them over the executors and handles communication.
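To make the driver/executor split more concrete, here is a toy single-machine analogy in Python. It is not how Spark is implemented: a thread pool stands in for the executors, and the helper names (`split`, `task`, `run_job`) are made up for illustration. In a real cluster the executors are separate JVM processes on the worker nodes, and the driver ships serialized tasks to them over the network.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split(data: Sequence[int], n: int) -> List[Sequence[int]]:
    """Driver step: cut the input into n partitions of near-equal size."""
    size, rest = divmod(len(data), n)
    parts, start = [], 0
    for i in range(n):
        end = start + size + (1 if i < rest else 0)  # first `rest` parts get one extra item
        parts.append(data[start:end])
        start = end
    return parts


def task(partition: Sequence[int]) -> int:
    """Executor step: compute a partial result for a single partition."""
    return sum(x * x for x in partition)


def run_job(data: Sequence[int], n_executors: int = 4) -> int:
    """Driver: schedule one task per partition, then combine the partial results."""
    partitions = split(data, n_executors)
    with ThreadPoolExecutor(max_workers=n_executors) as pool:
        partials = list(pool.map(task, partitions))
    return sum(partials)


if __name__ == "__main__":
    print(run_job(list(range(1_000)), n_executors=4))
```

The key property the analogy preserves is that each task only sees its own partition, so the final answer must be assembled by the driver from the partial results.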

Configuring clusters, scaling the number of nodes up or down to match the required capacity, and keeping these clusters up under heavy load are non-trivial tasks. Databricks takes care of this complexity and provides developers with an environment that is ready for data wrangling. For a running cluster, the logs and the Spark UI are also available within the Databricks platform for debugging and optimization.

If necessary, users can further tweak the default Spark clusters with features such as autoscaling, automatic termination, initialization scripts and open-source or custom libraries.
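As an illustration of these options, the sketch below builds request bodies for the Databricks REST API: one for `clusters/create` (autoscaling, automatic termination, an init script) and one for `libraries/install`. The runtime version, VM size, cluster name, script path and package names are placeholder assumptions; check which values your workspace supports before sending anything.

```python
import json
from typing import Dict, List


def cluster_spec(name: str, min_workers: int, max_workers: int,
                 idle_minutes: int, init_script: str) -> Dict:
    """Request body for the Clusters API `clusters/create` endpoint."""
    if not 1 <= min_workers <= max_workers:
        raise ValueError("need 1 <= min_workers <= max_workers")  # local sanity check
    return {
        "cluster_name": name,
        # Placeholder runtime and VM size: pick values valid in your workspace.
        "spark_version": "13.3.x-scala2.12",
        "node_type_id": "Standard_DS3_v2",
        # Autoscaling: workers are added or removed within this range.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Automatic termination after this many idle minutes.
        "autotermination_minutes": idle_minutes,
        # Initialization script stored as a workspace file, run on each node at start-up.
        "init_scripts": [{"workspace": {"destination": init_script}}],
    }


def library_spec(cluster_id: str, pypi: List[str], maven: List[str]) -> Dict:
    """Request body for the Libraries API `libraries/install` endpoint."""
    libs = [{"pypi": {"package": p}} for p in pypi]
    libs += [{"maven": {"coordinates": c}} for c in maven]
    return {"cluster_id": cluster_id, "libraries": libs}


if __name__ == "__main__":
    spec = cluster_spec("etl-cluster", 2, 8, 30, "/Shared/init/setup.sh")
    print(json.dumps(spec, indent=2))
```

Libraries are installed in a separate call because they are attached to an existing cluster by its ID, which only exists once `clusters/create` has returned.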

Running on Azure

Databricks can run on either Azure or AWS. By partnering with both global cloud vendors and their broad network of data centers, Databricks can offer its Unified Analytics Platform globally. Azure Databricks is a Databricks environment running on Azure compute and network infrastructure. Because it integrates with Active Directory, credential pass-through, infrastructure-as-code and many other Azure features, end users experience it as a native Azure service that offers a full Apache Spark platform (and more).

Collaborative Notebooks

Databricks is built around the concept of a notebook for writing code. Notebooks allow developers to combine code with graphs, markdown text and even pictures. In terms of programming languages, Databricks supports Python, Scala, R and SQL.

The advantages of notebooks are manifold. Firstly, notebooks are a great environment for embedding an analysis in a more comprehensive story, by augmenting the code with graphs and explanatory text. Secondly, working in notebooks makes it easy to collaborate with a team. Lastly, Databricks offers widgets that can be included in notebooks as input parameters. Using these widgets, a user can start from an existing notebook and adjust the analysis to his or her own assumptions.
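A common widget pattern is sketched below: declare a text widget and read its value, falling back to a default when the code runs outside Databricks (for example in a local unit test). `dbutils.widgets.text` and `dbutils.widgets.get` are Databricks notebook utilities; the helper `get_param` and the `threshold` parameter are hypothetical.

```python
def get_param(name: str, default: str, label: str = "") -> str:
    """Return a notebook parameter, or `default` when not running in Databricks."""
    try:
        widgets = dbutils.widgets  # noqa: F821  (`dbutils` only exists inside Databricks notebooks)
    except NameError:
        return default  # local run: no widget bar available
    widgets.text(name, default, label or name)  # declare a text-box widget with a default value
    return widgets.get(name)  # value typed by the user, or passed in by a job


# Hypothetical parameter: a score threshold the reader can tweak in the widget bar.
threshold = float(get_param("threshold", "0.5", "Score threshold"))
print(f"Using threshold {threshold}")
```

Widget values are always strings, which is why the sketch converts the result explicitly.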

On-demand Spark Jobs

Databricks makes it possible to run workloads as ‘jobs’, either on demand or on a defined schedule. At the time of writing, there are four types of jobs: notebook, Spark JAR, Spark Python and Spark Submit.

Although notebooks are great for the explorations and collaboration described above, their flexibility and free form can become a burden when bringing finalized jobs into production. In that case, submitting JARs or Python files can be a more robust workflow. All job types can be created and scheduled through the user interface or with API calls.
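Creating such jobs through the API might look like the sketch below: it builds request bodies for the Jobs API 2.1 `jobs/create` endpoint for a notebook, a Python file and a JAR task, with an optional Quartz cron schedule. Paths, cluster IDs and job names are placeholders, and Spark Submit tasks are left out to keep the sketch short.

```python
import json
from typing import Dict, List, Optional


def notebook_task(path: str, params: Dict[str, str]) -> dict:
    """Run a notebook; `params` arrive in the notebook as widget values."""
    return {"notebook_task": {"notebook_path": path, "base_parameters": params}}


def python_task(path: str, args: List[str]) -> dict:
    """Run a Python file with command-line arguments."""
    return {"spark_python_task": {"python_file": path, "parameters": args}}


def jar_task(main_class: str, jar: str, args: List[str]) -> dict:
    """Run the main class of a JAR that is attached to the task as a library."""
    return {"spark_jar_task": {"main_class_name": main_class, "parameters": args},
            "libraries": [{"jar": jar}]}


def make_job(name: str, task: dict, cluster_id: str,
             cron: Optional[str] = None, timezone: str = "UTC") -> dict:
    """Build a jobs/create body; without `cron` the job only runs on demand."""
    job = {"name": name,
           "tasks": [{"task_key": "main", "existing_cluster_id": cluster_id, **task}]}
    if cron is not None:
        job["schedule"] = {"quartz_cron_expression": cron,  # Quartz syntax, seconds first
                           "timezone_id": timezone,
                           "pause_status": "UNPAUSED"}
    return job


if __name__ == "__main__":
    # Placeholder values: a notebook scheduled daily at 06:00 UTC.
    nightly = make_job("nightly-ingest",
                       notebook_task("/Shared/etl/ingest", {"env": "prod"}),
                       cluster_id="0000-000000-placeholder",
                       cron="0 0 6 * * ?")
    print(json.dumps(nightly, indent=2))
```

A job created without a schedule can still be started on demand, for example with the `jobs/run-now` endpoint and the returned `job_id`.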

DevOps integration

Databricks fully supports DevOps: its Git integration enables versioning of notebooks, and Azure's ARM templates enable infrastructure-as-code. element61 has developed a best-practice setup to continuously integrate and deploy Databricks notebooks and jobs across development and production environments.
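One step of such a deployment pipeline can be sketched as follows: take a notebook's source code from the Git repository and import it into the workspace folder of each environment through the Workspace API (`POST /api/2.0/workspace/import`). The folder-per-environment convention, the host URL and the token handling are assumptions for illustration, not element61's actual setup.

```python
import base64
import json
import urllib.request


def import_body(source: str, workspace_path: str, language: str = "PYTHON") -> dict:
    """Request body that (over)writes one notebook from its source code."""
    return {
        "path": workspace_path,
        "format": "SOURCE",          # plain source file, as stored in Git
        "language": language,        # PYTHON, SCALA, SQL or R
        "content": base64.b64encode(source.encode("utf-8")).decode("ascii"),
        "overwrite": True,           # redeploying replaces the previous version
    }


def target_path(env: str, notebook: str) -> str:
    """Hypothetical convention: one shared folder per environment."""
    return f"/Shared/{env}/{notebook}"


def deploy(host: str, token: str, body: dict) -> None:
    """Send the import request; `host` is the workspace URL (placeholder)."""
    req = urllib.request.Request(
        f"{host}/api/2.0/workspace/import",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:  # raises HTTPError on a non-2xx reply
        resp.read()


if __name__ == "__main__":
    code = "print('hello from CI')"
    for env in ["dev", "test", "prod"]:
        body = import_body(code, target_path(env, "ingest"))
        print(env, body["path"])  # a real pipeline would call deploy(...) here
```

Deploying the same Git commit to every environment, and only varying the target folder, keeps development and production in sync.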

Real-time and Batch

Because it builds on Spark, Databricks supports both real-time pipelines (using Delta or Spark Streaming) and batch data jobs.

A real-time pipeline requires a Databricks cluster that is 'always on', but thanks to autoscaling up and down the cluster can efficiently handle both peak loads and low-demand periods. Because Databricks builds on open-source Spark, users can pick the framework of their choice, including Spark Streaming, Spark Structured Streaming or Delta. Contact us if you want to know more about the benefits of each framework.
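How one engine can serve both real-time and batch workloads can be illustrated with a toy example in plain Python (not Spark code). In Structured Streaming's micro-batch mode, a stream is treated as an ever-growing table and each micro-batch only updates the running result, so after the last batch the answer matches what a batch job over the same data returns. The event names below are made up.

```python
from collections import Counter
from typing import Iterable, List


def batch_count(events: List[str]) -> Counter:
    """Batch job: aggregate the complete dataset in one pass."""
    return Counter(events)


def streaming_count(micro_batches: Iterable[List[str]]) -> List[Counter]:
    """Streaming job: fold each micro-batch into running state and keep a snapshot."""
    state: Counter = Counter()
    snapshots = []
    for batch in micro_batches:
        state.update(batch)               # only the newly arrived rows are processed
        snapshots.append(Counter(state))  # copy, so later updates don't change it
    return snapshots


events = ["click", "view", "click", "buy", "view", "click"]
micro_batches = [events[:2], events[2:5], events[5:]]
snapshots = streaming_count(micro_batches)
assert snapshots[-1] == batch_count(events)  # same answer as the batch job
print(snapshots)
```

In Spark itself, the running state is kept in a checkpointed state store, and the same DataFrame query is started with `spark.readStream` and `writeStream` instead of `spark.read` and `write`.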

Interested? Read more!

Want to know more about Databricks and how it supports ML and BI, for example with its Databricks Delta feature? Continue reading about:

- Databricks on Azure (Azure Databricks)

- Databricks Delta

- Machine Learning on Databricks

- BI on Databricks

We can help!

element61 has extensive experience in Machine Learning and Databricks. Contact us for coaching, training, advice and implementation.