One of the core building blocks of Databricks is the Lakehouse architecture, which combines the best of data lakes and data warehouses. By combining the Lakehouse architecture with (generative) AI capabilities, Databricks is able to offer the Databricks Data Intelligence Platform.

The Databricks Data Intelligence Platform enables companies to store all their data in a single, open, and reliable format, while also supporting diverse analytics workloads such as BI, data science, AI, machine learning, and streaming. Databricks does this by providing a unified platform for managing and accessing the Lakehouse, with various components that address different needs and use cases.

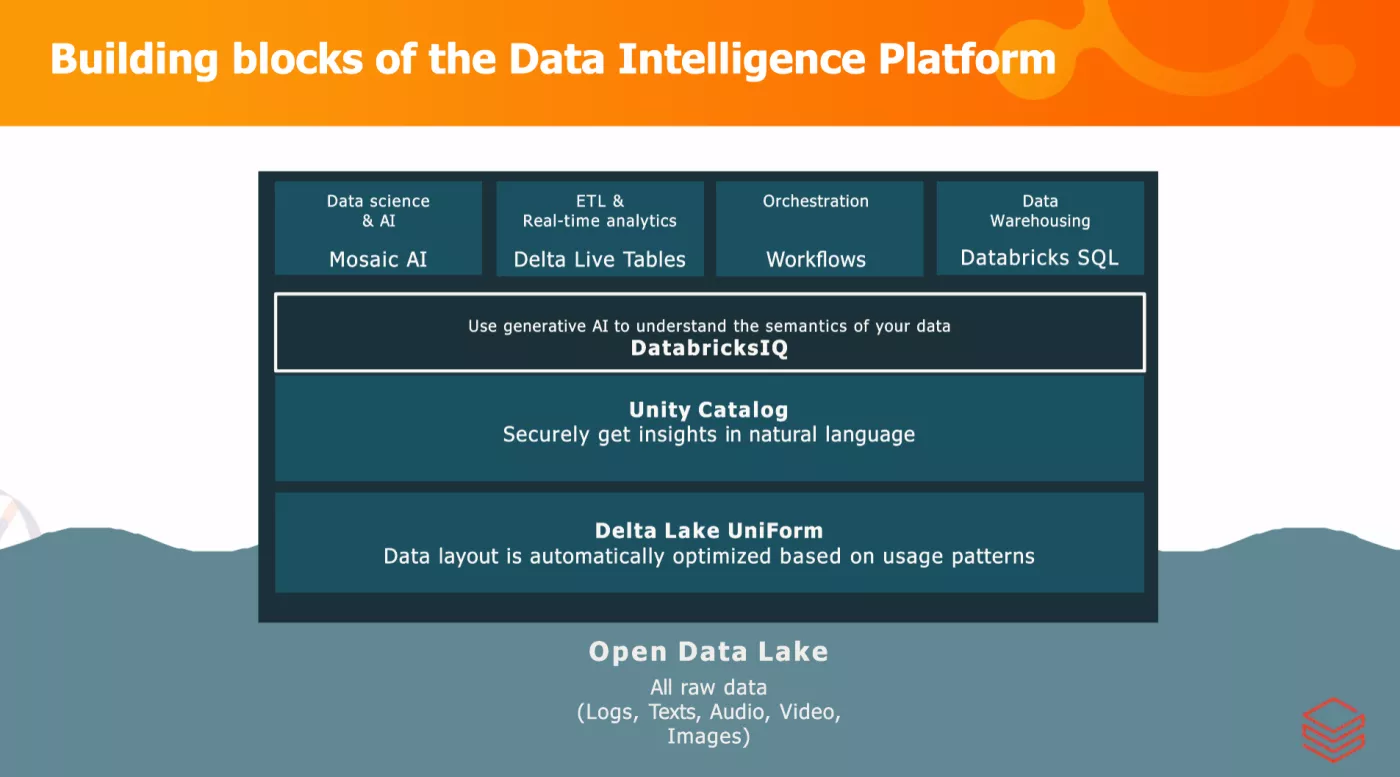

Some of the key enabling components of the Databricks Data Intelligence Platform are:

- Open Data Lake: Databricks supports all types of data, including raw data such as text, video, audio and beyond. Because a Lakehouse builds on a Data Lake, Databricks also supports all data formats.

- Delta Lake UniForm: On top of your open Data Lake, Databricks leverages Delta Lake, an open-source storage layer that brings ACID transactions, schema enforcement, and time travel to data lakes. Delta Lake enables users to store all their raw data, including structured, semi-structured, and unstructured data, in a single place, without compromising on performance, reliability, or scalability (and often even improving them). With Delta Lake UniForm, your data is also opened up automatically to other Lakehouse formats: Databricks prepares the metadata needed so that other tools such as BigQuery, Snowflake, Fabric and beyond can easily connect to your Delta Lake data.

- Unity Catalog is a service that allows you to govern all this data. With Unity Catalog (often abbreviated to UC), you have unified visibility, access control, lineage, discovery, monitoring, and data sharing for all the data and AI assets in your Lakehouse. This includes tables, files, models, notebooks, and dashboards. With Unity Catalog (an open-source-enabled framework), all your data and AI assets are governed.

- With DatabricksIQ, Databricks makes all this data speak and allows you to interact better with your data. Using generative AI, you can use DatabricksIQ to interact with your data and understand its semantics. One of its capabilities is Databricks Assistant, a chatbot on your own data.
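To make the Delta Lake ideas in the list above more tangible, here is a minimal, purely illustrative Python sketch of an append-only table log with schema enforcement, all-or-nothing commits and time travel. It is not Delta Lake's implementation or API: the `ToyVersionedTable` class, its methods and the sample rows are hypothetical names invented for this example, and everything lives in memory.

```python
from __future__ import annotations

from typing import Any


class ToyVersionedTable:
    """In-memory toy table. Hypothetical sketch, NOT the Delta Lake API."""

    def __init__(self, schema: dict[str, type]) -> None:
        self.schema = schema
        # Every committed batch is one log entry; its index is the version number.
        self._log: list[list[dict[str, Any]]] = []

    def _validate(self, row: dict[str, Any]) -> None:
        # Schema enforcement: exact column set and matching Python types.
        if set(row) != set(self.schema):
            raise ValueError(f"columns {sorted(row)} do not match the schema")
        for column, expected in self.schema.items():
            if not isinstance(row[column], expected):
                raise TypeError(f"column {column!r} expects {expected.__name__}")

    def append(self, rows: list[dict[str, Any]]) -> int:
        """Commit a batch atomically and return the new version number."""
        batch = [dict(row) for row in rows]
        for row in batch:
            # The whole batch is validated before anything is committed,
            # so a bad row never leaves partial data behind.
            self._validate(row)
        self._log.append(batch)
        return len(self._log) - 1

    def read(self, version: int | None = None) -> list[dict[str, Any]]:
        """Return all rows as of `version` (the latest version if None)."""
        if not self._log:
            return []
        latest = len(self._log) - 1
        if version is None:
            version = latest
        if not 0 <= version <= latest:
            raise ValueError(f"version {version} does not exist")
        return [dict(row) for batch in self._log[: version + 1] for row in batch]


table = ToyVersionedTable({"id": int, "name": str})
v0 = table.append([{"id": 1, "name": "Ada"}])
v1 = table.append([{"id": 2, "name": "Grace"}])

try:
    # The second row violates the schema, so the entire batch is rejected.
    table.append([{"id": 3, "name": "Linus"}, {"id": "four", "name": "Bad"}])
except TypeError as error:
    print(f"rejected batch: {error}")

print(table.read())           # latest state of the table
print(table.read(version=0))  # "time travel" to the first commit
```

In Delta Lake itself these guarantees come from a transaction log stored alongside the data files; the sketch only mirrors the behaviour a user observes.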

With these enablers, Databricks has a solid foundation to enable the use of your data. Databricks does this for various personas:

- Databricks supports Data Warehousing for BI & Business users through its Databricks SQL interface. Databricks SQL is a service that provides fast and easy access to data in the Lakehouse using familiar SQL queries and dashboards. Databricks SQL leverages the Delta engine, a vectorized query engine that can process large amounts of data in parallel, and Photon, a native execution engine that can run queries up to 20 times faster than Spark. Databricks SQL also supports text-to-SQL, a natural language interface that allows users to ask questions and get answers from their data in plain English.

- Databricks supports Data Scientists & ML Engineers through its Machine Learning & AI Platform: Databricks offers a rich set of tools and frameworks for data science and AI, including the Databricks Workspace, MLflow, AutoML and Mosaic AI. The Databricks Workspace is a collaborative environment that also allows data scientists to create and share notebooks, run experiments, and deploy models using various languages and libraries. MLflow is an MLOps framework and suite of services that automate and simplify the machine learning lifecycle, from data preparation and feature engineering (e.g. feature store), to model training and tuning, to model deployment (e.g. serving endpoints) and monitoring. Mosaic AI is a generative AI platform that enables users to create, fine-tune, and serve custom large language models (LLMs) for various natural language tasks, such as text summarization, sentiment analysis, and code generation.

- Databricks supports Data Engineering, orchestration and ETL for Data Engineers through several options for orchestrating and transforming data in the Lakehouse, such as Databricks Workflows, Delta Live Tables, and Databricks Jobs. Databricks Workflows is a service that allows users to create and manage workflows that combine multiple steps, such as data ingestion, transformation, analysis, and visualization, in a single pipeline. Delta Live Tables is a framework that simplifies the development and operation of streaming applications by automatically handling data quality, schema evolution, and scaling. Databricks Jobs is a service that allows users to schedule and run jobs on Databricks clusters, with features such as job retries, alerts, and cost optimization. With Databricks Serverless Compute, Databricks even provides hassle-free, hands-off and auto-optimized compute resources for workloads such as Databricks SQL, Workflows, and Delta Live Tables, with features such as fast startup, auto-scaling, auto-termination, and cost governance.
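The orchestration ideas described for Data Engineers above (a workflow that chains several steps, plus job retries) can be sketched in plain Python. This is only a conceptual illustration and does not use the Databricks Workflows or Jobs API: `run_workflow`, the `max_retries` parameter and the three task names are hypothetical and exist only for this example.

```python
from __future__ import annotations

from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable


def run_workflow(
    tasks: dict[str, Callable[[], None]],
    depends_on: dict[str, set[str]],
    max_retries: int = 2,
) -> list[str]:
    """Run tasks in dependency order and retry a failing task.

    Hypothetical helper, NOT a Databricks API. Returns the names of the
    tasks in the order in which they completed.
    """
    graph = {name: depends_on.get(name, set()) for name in tasks}
    completed: list[str] = []
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                completed.append(name)
                break
            except Exception:
                # Give up and surface the error once all retries are used.
                if attempt == max_retries:
                    raise
    return completed


attempts = {"transform": 0}


def ingest() -> None:
    print("ingesting raw data")


def transform() -> None:
    # Simulate a transient failure on the first attempt only.
    attempts["transform"] += 1
    if attempts["transform"] == 1:
        raise RuntimeError("transient failure")
    print("transforming data")


def publish() -> None:
    print("publishing results")


order = run_workflow(
    tasks={"publish": publish, "transform": transform, "ingest": ingest},
    depends_on={"transform": {"ingest"}, "publish": {"transform"}},
)
print(order)
```

A managed orchestrator adds scheduling, alerting and compute management on top of this basic pattern; the sketch covers only dependency ordering and retries.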

While the above is a comprehensive overview of the main building blocks of Databricks, new features are continuously being added. By using Databricks, users can benefit from the flexibility, scalability, and openness of the Lakehouse architecture, while also enjoying the performance, reliability, and usability of the various services and components that Databricks offers.

We can help!

element61 has extensive experience with Databricks. Contact us for coaching, training, advice and implementation.